Northwest Airlines,
Senior Consultant,
1991 April through 1995 October.

Airline Booking Simulation: In anticipation of new yield management
technology, I was asked to write a computer simulation of airline
booking from the first listing of flights through the day of
departure. Starting with a single hub-and-spoke "complex" of inbound
and outbound flights, I expanded the simulation to the entire
Northwest Airlines network: thousands of flight legs and 100,000
connections.

Enhanced Network Value Indexing for Yield Management: The booking
simulation needed a new booking policy to test, so I devised a
leg-based "displacement cost" strategy we called ENVI. ENVI became the
airline's yield management system from 1993 to 2006, earning about
$30 million per year in incremental revenue within the constraints of
the existing forecasting and reservations systems.

I designed, documented, developed, and tested both the simulation and
ENVI.
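The ENVI formulas themselves are not given here. As a minimal sketch, assuming the common leg-based form of displacement-cost control (often called bid-price control), a request for a multi-leg itinerary is accepted only if every leg has a seat and the fare covers the sum of the legs' displacement costs. The `Leg` record, its fields, and the cost values are hypothetical inputs for illustration; how ENVI actually estimated displacement costs is not reproduced.

```python
from dataclasses import dataclass


@dataclass
class Leg:
    """One flight leg (hypothetical fields, for illustration only)."""
    name: str
    seats_left: int
    displacement_cost: float  # assumed input: revenue expected to be lost by selling one more seat


def should_accept(fare: float, legs: list[Leg]) -> bool:
    """Accept if every leg has a seat and the fare covers the summed displacement costs."""
    if any(leg.seats_left <= 0 for leg in legs):
        return False
    return fare >= sum(leg.displacement_cost for leg in legs)


def book(fare: float, legs: list[Leg]) -> bool:
    """Apply the accept/reject rule and take one seat on each leg when accepted."""
    if not should_accept(fare, legs):
        return False
    for leg in legs:
        leg.seats_left -= 1
    return True


if __name__ == "__main__":
    feeder = Leg("A-HUB", seats_left=3, displacement_cost=80.0)
    trunk = Leg("HUB-B", seats_left=1, displacement_cost=150.0)
    print(book(200.0, [feeder, trunk]))  # False: 200 < 80 + 150
    print(book(260.0, [feeder, trunk]))  # True: takes the last HUB-B seat
    print(book(999.0, [feeder, trunk]))  # False: HUB-B is now full
```

The point of the leg-based form is that a connecting passenger is charged for the seats displaced on every leg, so a cheap through fare cannot crowd out higher-value local demand on a busy trunk leg.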

Delay and Cancellation Reporting System: Starting with delays for
eight international cargo airplanes, my delay-tracking system grew to
cover the entire airline. It presented the back-tracking of delay
causes in a format familiar to managers used to the legacy systems.
My software allows the airline to calculate "allocated" delay times
for root causes, including their downline consequences.
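The allocation rules are not spelled out here. One simple model, assumed purely for illustration, follows a single aircraft's rotation: the root cause delays the first leg, and the scheduled turn slack before each later leg absorbs part of the delay, so the minutes allocated to the root cause are the root delay plus all downline knock-on delay. The function names and the slack-only model are hypothetical; crew and passenger-connection effects are ignored.

```python
def propagate_delay(initial_delay: int, slack_minutes: list[int]) -> list[int]:
    """Delay (minutes) on each leg of one aircraft's rotation.

    initial_delay: delay on the first leg caused by the root event.
    slack_minutes[k]: spare turn time before the next leg that can absorb delay.
    """
    delays = [initial_delay]
    for slack in slack_minutes:
        # Slack soaks up delay; whatever is left carries to the next leg.
        delays.append(max(0, delays[-1] - slack))
    return delays


def allocated_delay(initial_delay: int, slack_minutes: list[int]) -> int:
    """Total delay minutes charged to the root cause, including downline legs."""
    return sum(propagate_delay(initial_delay, slack_minutes))


if __name__ == "__main__":
    print(propagate_delay(60, [15, 20, 30]))  # [60, 45, 25, 0]
    print(allocated_delay(60, [15, 20, 30]))  # 130
```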

Jet Engine Reliability Study: When high engine removal rates were
plaguing one of our engine types, I was asked to do a statistical
regression to see which components of our watchlist were meaningful.
After trying sophisticated "logit" models, I found that simple linear
techniques were more revealing, and I was able to find significant
behavior differences between sub-classes of this engine type.
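The engine data and watchlist variables are not given. As an illustrative sketch only, a linear probability model (ordinary least squares on a 0/1 removal outcome, the linear counterpart of a logit) can be fitted separately for each engine sub-class and the slopes compared. The record layout and function names are hypothetical, and a real study would also test whether slope differences are statistically significant.

```python
from statistics import mean


def ols_fit(xs, ys):
    """Ordinary least squares for y = intercept + slope * x (one explanatory variable)."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


def fit_by_subclass(records):
    """Fit one line per engine sub-class.

    records: (subclass, x, y) tuples; hypothetically x is a watchlist reading
    and y is 1 if the engine was removed, else 0.
    Returns {subclass: (intercept, slope)}.
    """
    groups = {}
    for subclass, x, y in records:
        xs, ys = groups.setdefault(subclass, ([], []))
        xs.append(x)
        ys.append(y)
    return {sub: ols_fit(xs, ys) for sub, (xs, ys) in groups.items()}
```

Unlike logit coefficients, each slope reads directly as the change in removal probability per unit of the watchlist variable, which makes sub-class differences easy to see.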

Scheduling Tools: I started my scheduling-support work with a
general connection builder to derive routes passengers might
reasonably fly from origin to destination. That connection builder
was used to bid for military transport contracts and evolved into a
true connection builder with all the hub, customs, and ground-time
constraints. I also built schedule-comparison and weekend-exception
programs that are still in use fifteen years later.
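As a minimal sketch of a one-stop connection builder, assume a hypothetical `Flight` record with times in minutes after midnight in a single time zone, and illustrative minimum and maximum ground times of 40 and 180 minutes (not Northwest's actual rules). Hub-specific and customs restrictions are omitted.

```python
from typing import NamedTuple


class Flight(NamedTuple):
    number: str
    origin: str
    dest: str
    dep: int  # minutes after midnight (single time zone assumed)
    arr: int


def build_connections(flights, min_connect=40, max_connect=180):
    """Return (inbound, outbound) pairs that form legal one-stop connections."""
    # Index departures by airport so each inbound only scans flights leaving its arrival point.
    by_origin = {}
    for f in flights:
        by_origin.setdefault(f.origin, []).append(f)

    connections = []
    for inbound in flights:
        for outbound in by_origin.get(inbound.dest, []):
            if outbound.dest == inbound.origin:
                continue  # skip itineraries that return to where they started
            ground_time = outbound.dep - inbound.arr
            if min_connect <= ground_time <= max_connect:
                connections.append((inbound, outbound))
    return connections
```

Indexing by departure airport keeps the work proportional to the number of plausible pairs at each station rather than all flight pairs in the schedule.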

AT&T Bell Laboratories,
Member of Technical Staff (MTS),
1982 April through 1991 March.

Medical Site Selection: Using United States census data and doctor
office location data from SK&A, we built a database of quarter-mile
"bins" containing doctors (servers) and population (users). I designed
and developed a program that used the Huff model from retail site
analysis, plus some computational shortcuts, to generate a "heatmap"
with a patient-count estimate for each bin based on proximity and
other attractive attributes, such as being near a freeway or a
hospital. I worked with my associate to build a more robust database
in open-source PostgreSQL using more precise census data. We have one
client so far, and he is happy with the report.
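The Huff model gives the probability that users in bin i choose facility j as P_ij = (A_j^α / d_ij^β) / Σ_k (A_k^α / d_ik^β), where A is attractiveness and d is distance; expected patients at a facility are each bin's population times that probability, summed over bins. A minimal sketch, assuming planar coordinates in miles, illustrative exponents α = 1 and β = 2, and attractiveness values that could fold in freeway or hospital proximity. The original program's parameters and computational shortcuts are not reproduced.

```python
import math


def huff_probabilities(user_xy, facilities, alpha=1.0, beta=2.0):
    """Share of one bin's population expected to choose each facility.

    facilities: list of (x, y, attractiveness); coordinates assumed in miles.
    """
    weights = []
    for fx, fy, attract in facilities:
        # Floor distance at one quarter-mile bin width so a facility in the
        # user's own bin does not divide by zero (an assumption of this sketch).
        d = max(math.dist(user_xy, (fx, fy)), 0.25)
        weights.append(attract ** alpha / d ** beta)
    total = sum(weights)
    return [w / total for w in weights]


def expected_patients(population_bins, facilities, alpha=1.0, beta=2.0):
    """Sum population x Huff probability over bins to get patients per facility."""
    totals = [0.0] * len(facilities)
    for x, y, pop in population_bins:
        probs = huff_probabilities((x, y), facilities, alpha, beta)
        for j, p in enumerate(probs):
            totals[j] += pop * p
    return totals
```

A heatmap for a prospective office can then be built by adding a candidate facility in each bin in turn and recording its expected patient count.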

CDMA Capacity Study: InterDigital is one of the technical pioneers of
Code Division Multiple Access (CDMA), the up-and-coming mobile
telephone technology in the United States. At the time, CDMA was an
untested theory, and nobody really knew its capacity in a dynamic
cellular telephone system. There were "static" equations for the
steady state, which I was able to extend using linear algebra and
sophisticated numerical techniques ("computing tricks") to get the
first handle on the dynamic capacity and blocking rate of a CDMA
system.
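For context, a textbook steady-state ("static") capacity relation for a single CDMA cell with perfect reverse-link power control is shown below. It is not necessarily the set of equations used in this study, and the dynamic extension is not reproduced. Here N is the number of simultaneous users, each received at power S; W is the spread bandwidth, R the bit rate, η the thermal noise power in W, and E_b/I_0 the required bit-energy-to-interference ratio:

```latex
\frac{E_b}{I_0} = \frac{S/R}{\big((N-1)\,S + \eta\big)/W}
\quad\Longrightarrow\quad
N = 1 + \frac{W/R}{E_b/I_0} - \frac{\eta}{S}
```

As the received power S grows, the noise term vanishes and N approaches the interference-limited bound 1 + (W/R)/(E_b/I_0); a dynamic model must additionally track users arriving and departing, which is what makes blocking rate a meaningful quantity.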

This was a cool job. Three of my engineering buddies and I decided to
go into the printed-circuit-board-routing business. Two of them wrote
a graphical editor (programmer friendly, user hostile). The third
wrote tools, built test cases, and handled technical support.

I designed and developed an autorouter that would take a schematic
network (called a "rat's nest" from its graphical network display)
and route printed-circuit-board traces with output for manufacture.
Besides doing straight "hug the traces" routing, both 45-degree and
orthogonal, my router did L-shape and C-shape routes with inter-layer
"vias" and used ripup-and-retry to find workable trace combinations.

Our product was never popular, but it had a small, devoted following
on six continents because of its capabilities.
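The router's internals are not described beyond the list above. As a hedged illustration of two core ideas, the sketch below uses Lee's breadth-first maze routing on a single-layer orthogonal grid, plus a crude ripup-and-retry loop that rips up every routed net and moves the failed net to the front of the order. The grid encoding, function names, and retry policy are assumptions; 45-degree segments and vias are not modeled.

```python
from collections import deque


def lee_route(grid, start, goal):
    """Shortest orthogonal path via breadth-first wave expansion (Lee's algorithm).

    grid: list of strings; '#' marks blocked cells. Returns [(row, col), ...] or None.
    """
    rows, cols = len(grid), len(grid[0])
    prev = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:  # walk back along the wavefront labels
                path.append(cell)
                cell = prev[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] != '#' and (nr, nc) not in prev):
                prev[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


def route_all(grid, nets, max_passes=5):
    """Route (name, (start, goal)) nets in order; on failure rip up all and retry."""
    order = list(nets)
    for _ in range(max_passes):
        work = [list(row) for row in grid]
        routes, failed = {}, None
        for name, (a, b) in order:
            path = lee_route(["".join(row) for row in work], a, b)
            if path is None:
                failed = name
                break
            routes[name] = path
            for r, c in path:
                work[r][c] = '#'  # a routed trace blocks later nets
        if failed is None:
            return routes
        order.sort(key=lambda item: item[0] != failed)  # failed net goes first
    return None
```

Reordering is the simplest retry policy; production routers typically rip up only the nets blocking the failed connection rather than everything.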

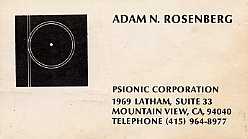

The Psionic Corporation,
1980 January through 1983 July.
